My Portfolio

MSc Thesis - Inductive Learning of Temporal Advice Formulae for Guiding Planners

2025-04-07 all, software, python, theory, logic programming

My thesis addresses the sample inefficiency and lack of transparency of reinforcement learning by proposing a method that learns temporal advice formulae to guide planning algorithms more effectively. Using linear temporal logic on finite traces, the approach unifies prior work on guiding planners and on learning from traces, and leverages ILASP to inductively learn answer set programs from examples. Unlike prior work, it investigates noisy, partially observable domains and automates the generation of advice. Experiments in two planning environments show that high-quality examples of agent behaviour can yield broadly applicable advice formulae.
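To give a feel for what a temporal advice formula says about a plan, here is a minimal sketch of checking a linear temporal logic formula against a finite trace. It is not the thesis code and does not involve ILASP: the tuple encoding of formulae, the function name `holds`, and the propositions `has_key` and `at_door` are all assumptions made for illustration.

```python
# Illustrative only: formulae are atom names (str) or tuples ("op", sub, ...).
# A trace is a non-empty list of sets of atoms that are true at each step.

def holds(formula, trace, i=0):
    """Return True if `formula` holds at position i of the finite `trace`."""
    if isinstance(formula, str):              # atomic proposition
        return formula in trace[i]
    op, *args = formula
    if op == "not":
        return not holds(args[0], trace, i)
    if op == "and":
        return holds(args[0], trace, i) and holds(args[1], trace, i)
    if op == "next":                          # strong next: a successor step must exist
        return i + 1 < len(trace) and holds(args[0], trace, i + 1)
    if op == "eventually":
        return any(holds(args[0], trace, j) for j in range(i, len(trace)))
    if op == "always":
        return all(holds(args[0], trace, j) for j in range(i, len(trace)))
    if op == "until":                         # args[0] holds until args[1] becomes true
        return any(
            holds(args[1], trace, j)
            and all(holds(args[0], trace, k) for k in range(i, j))
            for j in range(i, len(trace))
        )
    raise ValueError(f"unknown operator: {op}")


# Hypothetical advice: "stay away from the door until you have the key".
advice = ("until", ("not", "at_door"), "has_key")

good_trace = [set(), {"has_key"}, {"has_key", "at_door"}]
bad_trace = [{"at_door"}, {"has_key"}]

follows_advice = holds(advice, good_trace)    # True
violates_advice = holds(advice, bad_trace)    # False
```

A planner could use such a check to prefer action sequences whose traces satisfy the advice; how the thesis actually integrates advice into planning is not shown here.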

Bridging statistical learning theory and dynamic epistemic logic

2024-06-05 all, theory, logic, learning theory

This paper establishes a connection between statistical learning theory (SLT) and formal learning theory (FLT). We use this insight to connect SLT and dynamic epistemic logic (DEL) models via the already established FLT and DEL bridge due to [Gierasimczuk, 2009]. Specifically, we demonstrate that the uniform convergence property of a hypothesis space implies the finite identifiability of its corresponding epistemic space which, in turn, can be modelled in DEL [Gierasimczuk, 2009]. This paper thus lays the foundations of an alternative way to introduce probabilistic reasoning into DEL, reason about statistical learning scenarios topologically, and investigate the epistemology of statistical learning theory.
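For readers unfamiliar with the term, the uniform convergence property can be stated as follows. This is the usual textbook formulation, written with notation I am assuming here ($\mathcal{H}$ for the hypothesis space, $L_S$ and $L_{\mathcal{D}}$ for empirical and true risk); the paper's own definition may differ in detail.

```latex
% H has the uniform convergence property if there is a sample-complexity
% function m^{UC}_H such that large enough samples are epsilon-representative
% for every hypothesis simultaneously.
\exists\, m^{\mathrm{UC}}_{\mathcal{H}} : (0,1)^2 \to \mathbb{N}
\quad \text{such that} \quad
\forall \varepsilon, \delta \in (0,1),\ \forall \mathcal{D},\
\forall m \ge m^{\mathrm{UC}}_{\mathcal{H}}(\varepsilon, \delta):
\qquad
\Pr_{S \sim \mathcal{D}^m}\!\left[\,
  \forall h \in \mathcal{H}:\
  \bigl| L_S(h) - L_{\mathcal{D}}(h) \bigr| \le \varepsilon
\,\right] \ge 1 - \delta .
```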

Investigating the cross-lingual sharing mechanism of multilingual models through their subnetworks

2024-06-01 all, software, python, pytorch, natural language processing

We investigate to what extent computation is shared across languages in multilingual models. In particular, we prune language-specific subnetworks from a multilingual model and check how much overlap there is between subnetworks for different languages and how these subnetworks perform across other languages. We also measure the similarity of the hidden states of a multilingual model for parallel sentences in different languages. All of these experiments suggest that a substantial amount of computation is being shared across languages in the multilingual model we use (XLM-R).
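As a rough illustration of what "overlap between subnetworks" can mean, the sketch below compares two binary pruning masks with the Jaccard index. The choice of Jaccard, the function name `jaccard_overlap`, and the toy masks are assumptions for illustration; the project may have used a different overlap measure.

```python
def jaccard_overlap(mask_a, mask_b):
    """Jaccard index of the parameters kept (value 1) by two pruning masks."""
    if len(mask_a) != len(mask_b):
        raise ValueError("masks must cover the same parameters")
    kept_a = {i for i, keep in enumerate(mask_a) if keep}
    kept_b = {i for i, keep in enumerate(mask_b) if keep}
    union = kept_a | kept_b
    # Two empty subnetworks are treated as identical.
    return len(kept_a & kept_b) / len(union) if union else 1.0


# Toy masks over six parameters for two hypothetical language subnetworks.
mask_en = [1, 1, 0, 1, 0, 1]
mask_de = [1, 0, 0, 1, 1, 1]

overlap = jaccard_overlap(mask_en, mask_de)   # 3 shared / 5 in the union = 0.6
```

A value near 1 means the two languages keep almost the same parameters, while a value near 0 means their subnetworks are largely disjoint.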

Reproducibility Study of 'Learning Perturbations to Explain Time Series Predictions'

2024-05-18 all, publication, software, python, pytorch

In this work, we attempt to reproduce the results of Enguehard (2023), which introduced ExtremalMask, a mask-based perturbation method for explaining time series data. We investigated the key claims of this paper, namely that (1) the model outperformed other models in several key metrics on both synthetic and real data, and (2) the model performed better when using the loss function of the preservation game relative to that of the deletion game. Although discrepancies exist, our results generally support the core of the original paper’s conclusions. Next, we interpret ExtremalMask’s outputs using new visualizations and metrics and discuss the insights each interpretation provides. Finally, we tested whether ExtremalMask creates out-of-distribution samples and found that the model does not exhibit this flaw on our tested synthetic dataset. Overall, our results support and add nuance to the original paper’s findings.

InferSent sentence representations from scratch

2024-04-21 all, software, python, pytorch, natural language processing

This repository is a modular and extendable PyTorch Lightning re-implementation of InferSent sentence representations by Conneau et al., 2017. We train four types of sentence encoders - average, unidirectional LSTM, bidirectional LSTM, bidirectional LSTM with max pooling - via the natural language inference task using the SNLI dataset. This is done to create sentence representations which are general and transferable to other tasks. Refer to the paper for more information.

Causality study - how social networks influence one's decision to insure

2023-12-22 all, python, software, causality, dowhy, networkx

We look at an experiment that investigated how the social environment of rice farmers in rural China influences whether they purchase weather insurance, along with other variables such as demographics or whether they have previously adopted weather insurance.

Measuring and mitigating factual hallucinations for text summarization

2023-10-14 all, software, python, pytorch, natural language processing

Advances in natural language generation (NLG) have improved text quality, but generated text still suffers from hallucinations, which introduce irrelevant information and factual inconsistencies. This paper provides an ensemble of metrics that measure whether the generated text is factually correct. Using these metrics, we find that fine-tuning is a fruitful hallucination mitigation approach whilst prompt engineering is not.

Towards counterfactual logics for machine learning

2023-08-29 all, theory, python, logic, machine learning

In this project, I explored the connections between the informal, but practical, counterfactual explanation (CE) generation method and the formal semantic frameworks for counterfactuals. Specifically, I described a restricted notion of soundness and completeness and attempted to prove it for CE generation and variation semantics. I succeeded in proving soundness but not completeness. Lastly, this paper provides ideas for further investigations in this area, and intuitions for how completeness may still be achieved.

Topomodels in Haskell

2023-06-04 all, software, haskell, modal logic, topology

Repository Report Presentation

We provide a library for working with general topological spaces as well as topomodels for modal logic. We also implement a well-known construction for converting topomodels to S4 Kripke models and back. Furthermore, we developed correctness tests and benchmarks which are meant to be extended by users. Thus, this work serves as a solid starting point for investigating hypotheses about general topology, modal logic, and their intersection.
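The entry above does not name the construction, so the following is only a sketch under the assumption that it is the standard one for finite spaces: a point x is related to y exactly when y lies in every open set containing x. It is written in Python rather than Haskell, and the function names and the two-point example space are illustrative, not the library's API.

```python
def topo_to_kripke(points, opens):
    """Build the relation R with x R y iff y is in every open set containing x."""
    relation = set()
    for x in points:
        # Intersect all open sets containing x: the smallest open neighbourhood of x.
        neighbourhood = set(points)
        for u in opens:
            if x in u:
                neighbourhood &= set(u)
        relation |= {(x, y) for y in neighbourhood}
    return relation


def is_reflexive(points, relation):
    return all((x, x) in relation for x in points)


def is_transitive(relation):
    return all(
        (x, z) in relation
        for (x, y1) in relation
        for (y2, z) in relation
        if y1 == y2
    )


# Two-point example space: the open sets are {}, {a} and {a, b}.
points = {"a", "b"}
opens = [set(), {"a"}, {"a", "b"}]

relation = topo_to_kripke(points, opens)
# relation == {("a", "a"), ("b", "a"), ("b", "b")}
```

The resulting relation is reflexive and transitive, which is what an S4 Kripke frame requires. Going back, one would take the upward-closed sets of the relation as the open sets.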

Domain theory and its topological connections

2023-02-01 all, theory, denotational semantics, lattice theory, topology

Researched and succinctly summarized an introduction to Domain Theory for MSc Logic students as part of our Topology In and Via Logic course. In the presentation we put some emphasis on the

BSc Thesis - Formal Verification of Deep Neural Networks for Sentiment Classification

2021-06-27 all, software, python, pytorch, natural language processing, julia, NeuralVerification.jl

Repository Thesis Presentation

I conducted research on verification, a technique that guarantees certain properties in neural networks. My focus was on the effectiveness of existing verification frameworks used in networks for sentiment classification, given the limited research in concrete NLP verification. Additionally, I explored the latent space attributes of various text representation methods. My findings reveal that the latent space created by an autoencoder, trained using a denoising adversarial objective, is effective for confirming the robustness of networks engaged in sentiment classification and for interpreting the outcomes of verification tools.

PySeidon - A Data-Driven Maritime Port Simulation Framework

2021-06-01 all, publication, software, python, geoplotlib, geojson, esper, fysom

Extendable and modular software for maritime port simulation. PySeidon can be used to explore the effects of a decision introduced in a port, perform scenario testing, approximate Key Performance Indicators of some decision, and create new data for various downstream tasks (e.g. anomaly detection).

Software project born as part of MaRBLe 2.0.

Most representative song of course participants

2021-03-26 all, python, various music APIs (e.g. spotify), seaborn

We wanted to figure out the most representative song of our course. As the primary dataset, we used the YouTube Music playlist that was shared among students attending the Data Analysis course and that everyone could freely edit. We used several music APIs to craft a dataset with interesting features of the songs in the playlist, such as tempo, key, and danceability. We explored the data and present our findings in the video.

Aspect-Based Sentiment Analysis

2020-05-26 all, software, python, natural language processing, pytorch, tensorflow, textblob, spacy

I built a tool that analyzes the comments under a New York Times article for my Natural Language Processing course. Specifically, it finds aspects (topics) in a sentence and predicts the sentiment associated with each of them. This gives a more nuanced picture of the opinions expressed in a sentence than its average sentiment alone.

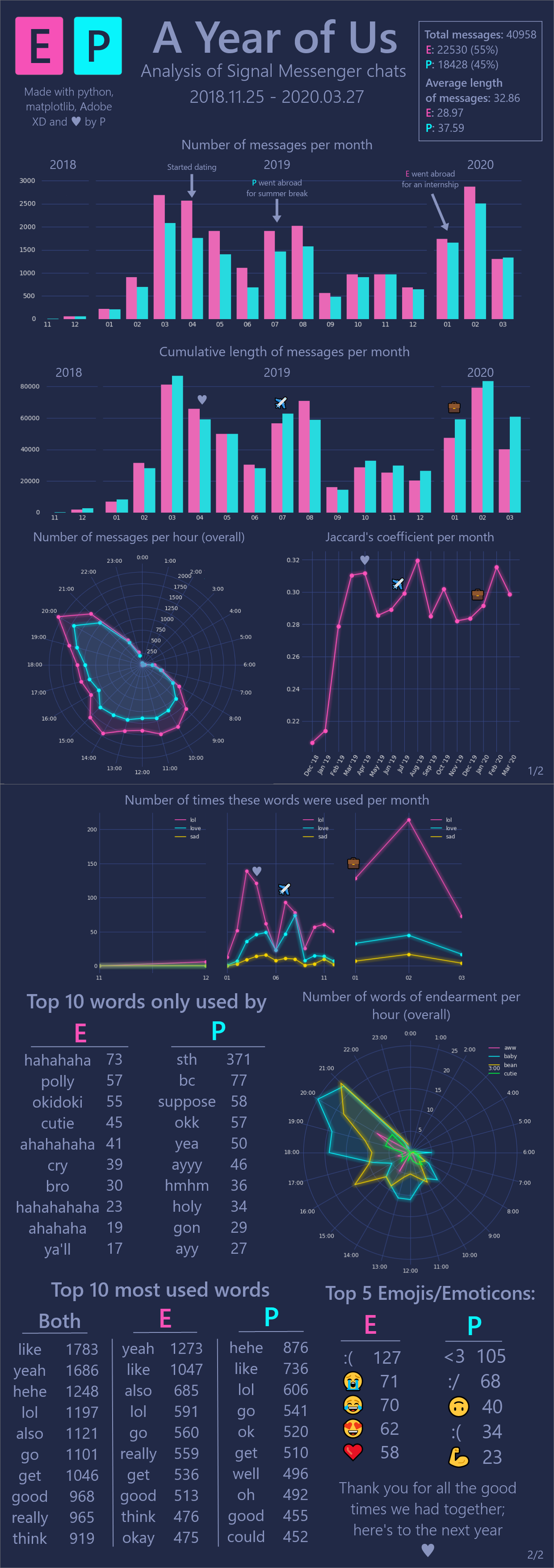

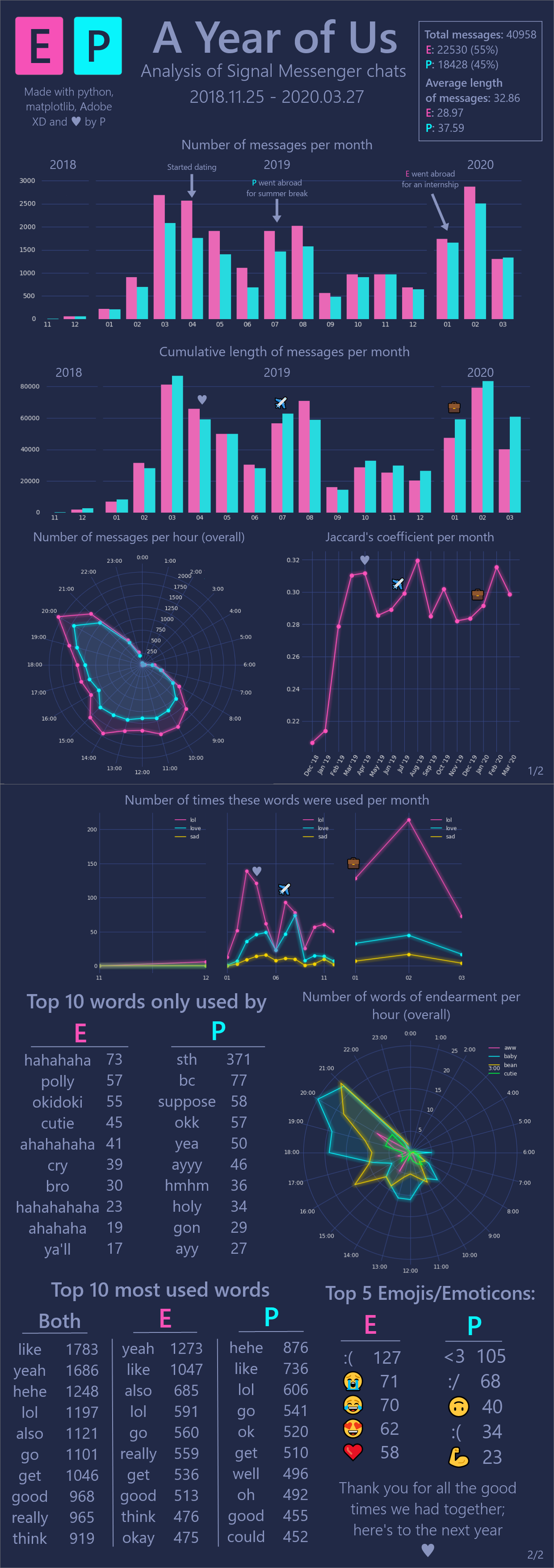

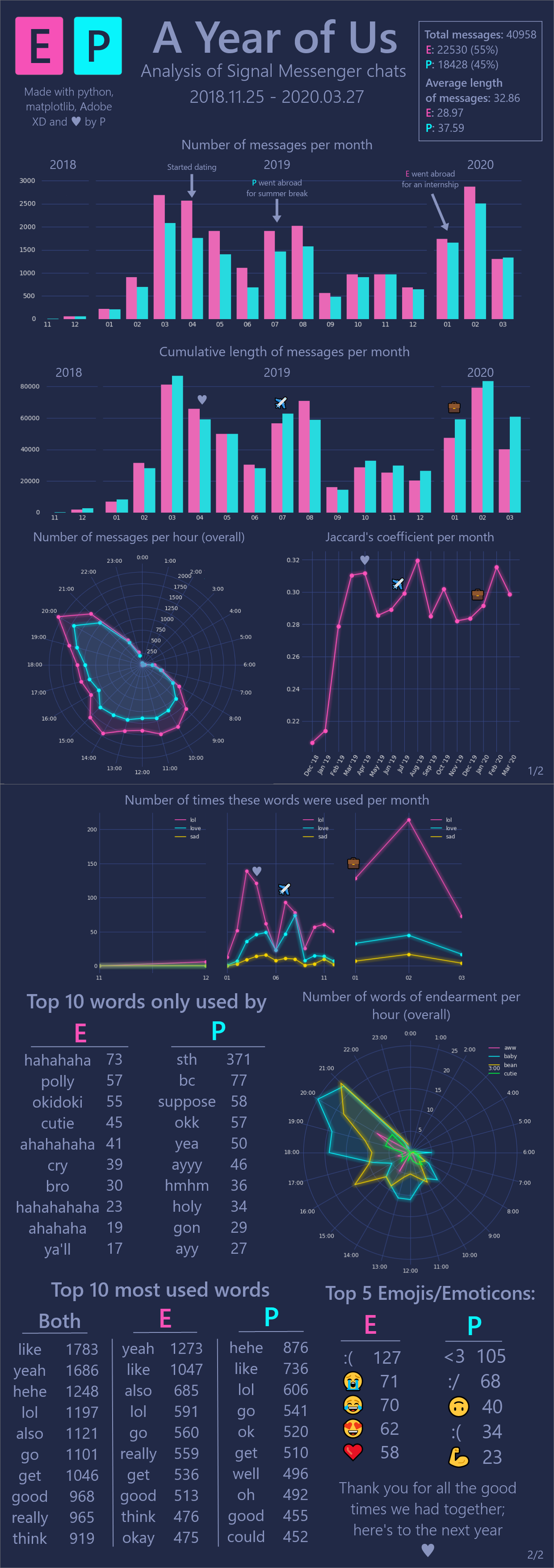

Analysis of Signal Messenger chat for relationship anniversary

2020-03-14 all, natural language processing, adobe xd, python, matplotlib, nltk

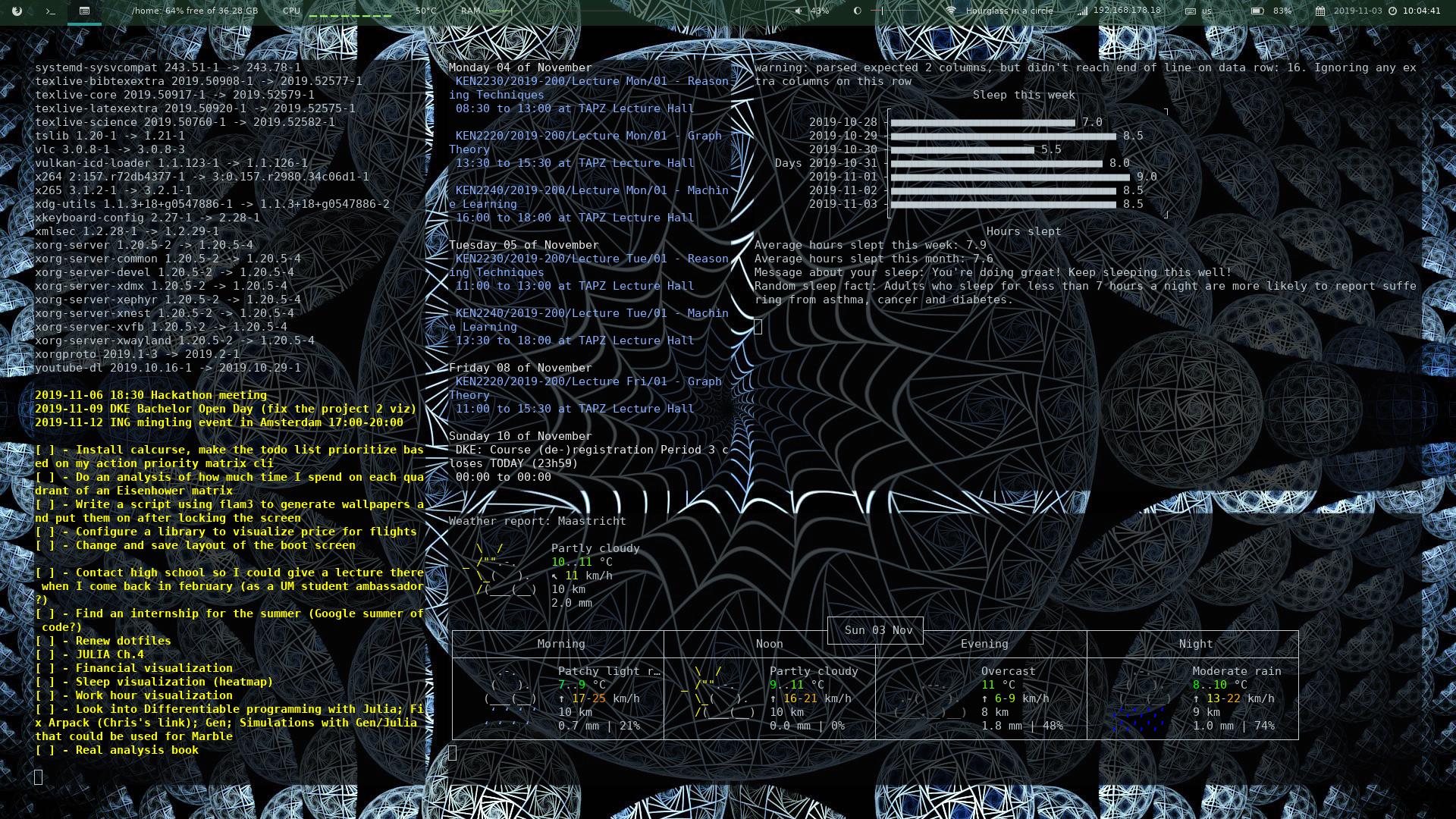

Scripts customizing my personal computer experience

2020-01-01 all, software

I use the i3 window manager and spent quite a few hours making it look nice and comfy.

This little Julia script allows me to easily answer the question "How well am I sleeping?".

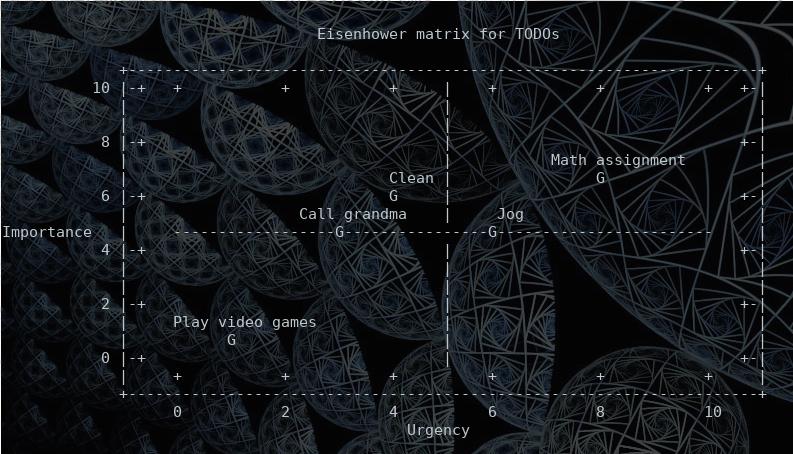

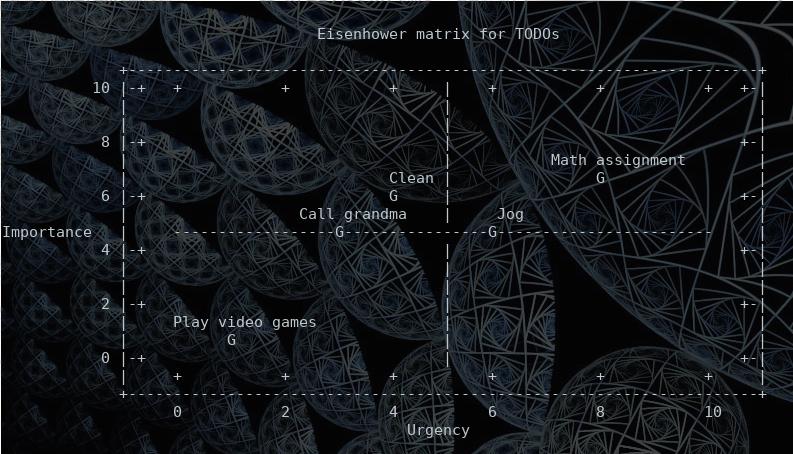

I like keeping track of the tasks I need to do and which ones are most important and urgent. I like it even more when that visualization is minimal and in the terminal.


publication

Reproducibility Study of 'Learning Perturbations to Explain Time Series Predictions'

2024-05-18 all, publication, software, python, pytorch

In this work, we attempt to reproduce the results of Enguehard (2023), which introduced ExtremalMask, a mask-based perturbation method for explaining time series data. We investigated the key claims of this paper, namely that (1) the model outperformed other models in several key metrics on both synthetic and real data, and (2) the model performed better when using the loss function of the preservation game relative to that of the deletion game. Although discrepancies exist, our results generally support the core of the original paper’s conclusions. Next, we interpret ExtremalMask’s outputs using new visualizations and metrics and discuss the insights each interpretation provides. Finally, we test whether ExtremalMask create out of distribution samples, and found the model does not exhibit this flaw on our tested synthetic dataset. Overall, our results support and add nuance to the original paper’s findings.

PySeidon - A Data-Driven Maritime Port Simulation Framework

2021-06-01 all, publication, software, python, geoplotlib, geojson, esper, fysom

Extendable and modular software for maritime port simulation. PySeidon can be used to explore the effects of a decision introduced in a port, perform scenario testing, approximate Key Performance Indicators of some decision, create new data for various downstream tasks (e.g. anomaly detection).

Software project born as part of MaRBLe 2.0.

theory

MSc Thesis - Inductive Learning of Temporal Advice Formulae for Guiding Planners

2025-04-07 all, software, python, theory, logic programming

My thesis addresses the sample inefficiency and lack of transparency in reinforcement learning by proposing a method to learn temporal advice formulae that guide planning algorithms more effectively. Using linear temporal logic on finite traces, the approach unifies prior work on guiding planners and learning from traces, leveraging ILASP to inductively learn answer set programs from examples. My work investigates noisy, partially observable domains and automates the generation of advice, unlike other works. Experiments in two planning environments show that high-quality examples of agent behaviour can yield broadly applicable advice formulae.

Bridging statistical learning theory and dynamic epistemic logic

2024-06-05 all, theory, logic, learning theory

This paper establishes a connection between statistical learning theory (SLT) and formal learning theory (FLT). We use this insight to connect SLT and dynamic epistemic logic (DEL) models via the already established FLT and DEL bridge due to [Gierasimczuk, 2009]. Specifically, we demonstrate that the uniform convergence property of a hypothesis space implies the finite identifiability of its corresponding epistemic space which, in turn, can be modelled in DEL [Gierasimczuk, 2009]. This paper thus lays the foundations of an alternative way to introduce probabilistic reasoning into DEL, reason about statistical learning scenarios topologically, and investigate the epistemology of statistical learning theory.

Towards counterfactual logics for machine learning

2023-08-29 all, theory, python, logic, machine learning

In this project, I have explored the connections between the informal, but practical, counterfactual explanation generation method and the formal semantic frameworks for counterfactuals. Specifically, I described a restricted notion of soundness and completeness and attempted to prove it for CE generation and variation semantics. I have succeeded in proving soundness but not completeness. Lastly, this paper provides ideas for further investigations in this area, and intuitions how completeness may be still achieved.

Domain theory and its topological connections

2023-02-01 all, theory, denotational semantics, lattice theory, topology

Researched and succinctly summarized an introduction to Domain Theory for MSc Logic students as part of our Topology In and Via Logic course. In the presentation we put some emphasis on the

software

MSc Thesis - Inductive Learning of Temporal Advice Formulae for Guiding Planners

2025-04-07 all, software, python, theory, logic programming

My thesis addresses the sample inefficiency and lack of transparency in reinforcement learning by proposing a method to learn temporal advice formulae that guide planning algorithms more effectively. Using linear temporal logic on finite traces, the approach unifies prior work on guiding planners and learning from traces, leveraging ILASP to inductively learn answer set programs from examples. My work investigates noisy, partially observable domains and automates the generation of advice, unlike other works. Experiments in two planning environments show that high-quality examples of agent behaviour can yield broadly applicable advice formulae.

Investigating the cross-lingual sharing mechanism of multilingual models through their subnetworks

2024-06-01 all, software, python, pytorch, natural language processing

We investigate to what extent computation is shared across languages in multilingual models. In particular, we prune language-specific subnetworks from a multilingual model and check how much overlap there is between subnetworks for different languages and how these subnetworks perform across other languages. We also measure the similarity of the hidden states of a multilingual model for parallel sentences in different languages. All of these experiments suggest that a substantial amount of computation is being shared across languages in the multilingual model we use (XLM-R).

Reproducibility Study of 'Learning Perturbations to Explain Time Series Predictions'

2024-05-18 all, publication, software, python, pytorch

In this work, we attempt to reproduce the results of Enguehard (2023), which introduced ExtremalMask, a mask-based perturbation method for explaining time series data. We investigated the key claims of this paper, namely that (1) the model outperformed other models in several key metrics on both synthetic and real data, and (2) the model performed better when using the loss function of the preservation game relative to that of the deletion game. Although discrepancies exist, our results generally support the core of the original paper’s conclusions. Next, we interpret ExtremalMask’s outputs using new visualizations and metrics and discuss the insights each interpretation provides. Finally, we test whether ExtremalMask create out of distribution samples, and found the model does not exhibit this flaw on our tested synthetic dataset. Overall, our results support and add nuance to the original paper’s findings.

InferSent sentence representations from scratch

2024-04-21 all, software, python, pytorch, natural language processing

This repository is a modular and extendable PyTorch Lightning re-implementation of InferSent sentence representations by Conneau et al., 2017. We train four types of sentence encoders - average, unidirectional LSTM, bidirectional LSTM, bidirectional LSTM with max pooling - via the natural language inference task using the SNLI dataset. This is done to create sentence representations which are general and transferable to other tasks. Refer to the paper for more information.

Causality study - how social networks influence one's decision to insure

2023-12-22 all, python, software, causality, dowhy, networkx

We look at an experiment that investigated how the social environment of rice farmers in rural China influences whether they purchase weather insurance, along with other variables such as demographics or whether they have previously adopted weather insurance.

Measuring and mitigating factual hallucinations for text summarization

2023-10-14 all, software, python, pytorch, natural language processing

Advancements in NLG have improved text generation quality but still suffer from hallucinations, which lead to irrelevant information and factual inconsistencies. This paper provides an ensemble of metrics that measure whether the generated text is factually correct. Using these metrics we find that fine-tuning is a fruitful hallucination mitigation approach whilst prompt engineering is not.

Topomodels in Haskell

2023-06-04 all, software, haskell, modal logic, topology

Repository Report Presentation

We provide a library for working with general topological spaces as well as topomodels for modal logic. We also implement a well-known construction for converting topomodels to S4 Kripke models and back. Furthermore, we developed correctness tests and benchmarks which are meant to be extended by users. Thus, this work serves as a solid starting point for investigating hypotheses about general topology, modal logic, and their intersection.

BSc Thesis - Formal Verification of Deep Neural Networks for Sentiment Classification

2021-06-27 all, software, python, pytorch, natural language processing, julia, NeuralVerification.jl

Repository Thesis Presentation

I conducted research on verification, a technique that guarantees certain properties in neural networks. My focus was on the effectiveness of existing verification frameworks used in networks for sentiment classification, given the limited research in concrete NLP verification. Additionally, I explored the latent space attributes of various text representation methods. My findings reveal that the latent space created by an autoencoder, trained using a denoising adversarial objective, is effective for confirming the robustness of networks engaged in sentiment classification and for interpreting the outcomes of verification tools.

PySeidon - A Data-Driven Maritime Port Simulation Framework

2021-06-01 all, publication, software, python, geoplotlib, geojson, esper, fysom

Extendable and modular software for maritime port simulation. PySeidon can be used to explore the effects of a decision introduced in a port, perform scenario testing, approximate Key Performance Indicators of some decision, create new data for various downstream tasks (e.g. anomaly detection).

Software project born as part of MaRBLe 2.0.

Aspect-Based Sentiment Analysis

2020-05-26 all, software, python, natural language processing, pytorch, tensorflow, textblob, spacy

I built a tool that analyzes the comments under a New York Times article for my Natural Language Processing course. Specifically, it finds aspects (topics) in a sentence and predicts the sentiment associated with them. This approach allows to get a more nuanced opinion regarding various sentiments mentioned in sentences, not just the average sentiment of the sentence.

Scripts customizing my personal computer experience

2020-01-01 all, software

I use i3 window manager and spent quite a few hours making it look nice and comfy.

|

|---|

This little Julia script allows me to easily answer the question "How well am I sleeping?".

|

|

|---|

I like keeping track of the tasks I need to do and which ones are most important and urgent. I like it even more when that visualization is minimal and in the terminal.

|

|---|

python

MSc Thesis - Inductive Learning of Temporal Advice Formulae for Guiding Planners

2025-04-07 all, software, python, theory, logic programming

My thesis addresses the sample inefficiency and lack of transparency in reinforcement learning by proposing a method to learn temporal advice formulae that guide planning algorithms more effectively. Using linear temporal logic on finite traces, the approach unifies prior work on guiding planners and learning from traces, leveraging ILASP to inductively learn answer set programs from examples. My work investigates noisy, partially observable domains and automates the generation of advice, unlike other works. Experiments in two planning environments show that high-quality examples of agent behaviour can yield broadly applicable advice formulae.

Investigating the cross-lingual sharing mechanism of multilingual models through their subnetworks

2024-06-01 all, software, python, pytorch, natural language processing

We investigate to what extent computation is shared across languages in multilingual models. In particular, we prune language-specific subnetworks from a multilingual model and check how much overlap there is between subnetworks for different languages and how these subnetworks perform across other languages. We also measure the similarity of the hidden states of a multilingual model for parallel sentences in different languages. All of these experiments suggest that a substantial amount of computation is being shared across languages in the multilingual model we use (XLM-R).

Reproducibility Study of 'Learning Perturbations to Explain Time Series Predictions'

2024-05-18 all, publication, software, python, pytorch

In this work, we attempt to reproduce the results of Enguehard (2023), which introduced ExtremalMask, a mask-based perturbation method for explaining time series data. We investigated the key claims of this paper, namely that (1) the model outperformed other models in several key metrics on both synthetic and real data, and (2) the model performed better when using the loss function of the preservation game relative to that of the deletion game. Although discrepancies exist, our results generally support the core of the original paper’s conclusions. Next, we interpret ExtremalMask’s outputs using new visualizations and metrics and discuss the insights each interpretation provides. Finally, we test whether ExtremalMask create out of distribution samples, and found the model does not exhibit this flaw on our tested synthetic dataset. Overall, our results support and add nuance to the original paper’s findings.

InferSent sentence representations from scratch

2024-04-21 all, software, python, pytorch, natural language processing

This repository is a modular and extendable PyTorch Lightning re-implementation of InferSent sentence representations by Conneau et al., 2017. We train four types of sentence encoders - average, unidirectional LSTM, bidirectional LSTM, bidirectional LSTM with max pooling - via the natural language inference task using the SNLI dataset. This is done to create sentence representations which are general and transferable to other tasks. Refer to the paper for more information.

Causality study - how social networks influence one's decision to insure

2023-12-22 all, python, software, causality, dowhy, networkx

We look at an experiment that investigated how the social environment of rice farmers in rural China influences whether they purchase weather insurance, along with other variables such as demographics or whether they have previously adopted weather insurance.

Measuring and mitigating factual hallucinations for text summarization

2023-10-14 all, software, python, pytorch, natural language processing

Advancements in NLG have improved text generation quality but still suffer from hallucinations, which lead to irrelevant information and factual inconsistencies. This paper provides an ensemble of metrics that measure whether the generated text is factually correct. Using these metrics we find that fine-tuning is a fruitful hallucination mitigation approach whilst prompt engineering is not.

Towards counterfactual logics for machine learning

2023-08-29 all, theory, python, logic, machine learning

In this project, I have explored the connections between the informal, but practical, counterfactual explanation generation method and the formal semantic frameworks for counterfactuals. Specifically, I described a restricted notion of soundness and completeness and attempted to prove it for CE generation and variation semantics. I have succeeded in proving soundness but not completeness. Lastly, this paper provides ideas for further investigations in this area, and intuitions how completeness may be still achieved.

BSc Thesis - Formal Verification of Deep Neural Networks for Sentiment Classification

2021-06-27 all, software, python, pytorch, natural language processing, julia, NeuralVerification.jl

Repository Thesis Presentation

I conducted research on verification, a technique that guarantees certain properties in neural networks. My focus was on the effectiveness of existing verification frameworks used in networks for sentiment classification, given the limited research in concrete NLP verification. Additionally, I explored the latent space attributes of various text representation methods. My findings reveal that the latent space created by an autoencoder, trained using a denoising adversarial objective, is effective for confirming the robustness of networks engaged in sentiment classification and for interpreting the outcomes of verification tools.

PySeidon - A Data-Driven Maritime Port Simulation Framework

2021-06-01 all, publication, software, python, geoplotlib, geojson, esper, fysom

Extendable and modular software for maritime port simulation. PySeidon can be used to explore the effects of a decision introduced in a port, perform scenario testing, approximate Key Performance Indicators of some decision, create new data for various downstream tasks (e.g. anomaly detection).

Software project born as part of MaRBLe 2.0.

Most representative song of course participants

2021-03-26 all, python, various music APIs (e.g. spotify), seaborn

We wanted to figure out the most representitive song of our course. As the primary dataset we used the YouTube music playlist that was shared among students attending the Data Analysis course. Everyone could freely edit it. We used many music APIs to craft a dataset that had interesting features of the songs appearing in the playlist such as tempo, key, danceability, etc. We explored the data and present our findings in the aforementioned video.

Aspect-Based Sentiment Analysis

2020-05-26 all, software, python, natural language processing, pytorch, tensorflow, textblob, spacy

I built a tool that analyzes the comments under a New York Times article for my Natural Language Processing course. Specifically, it finds aspects (topics) in a sentence and predicts the sentiment associated with them. This approach allows to get a more nuanced opinion regarding various sentiments mentioned in sentences, not just the average sentiment of the sentence.

Analysis of Signal Messenger chat for relationship anniversary

2020-03-14 all, natural language processing, adobe xd, python, matplotlib, nltk

|

|---|

pytorch

Investigating the cross-lingual sharing mechanism of multilingual models through their subnetworks

2024-06-01 all, software, python, pytorch, natural language processing

We investigate to what extent computation is shared across languages in multilingual models. In particular, we prune language-specific subnetworks from a multilingual model and check how much overlap there is between subnetworks for different languages and how these subnetworks perform across other languages. We also measure the similarity of the hidden states of a multilingual model for parallel sentences in different languages. All of these experiments suggest that a substantial amount of computation is being shared across languages in the multilingual model we use (XLM-R).

Reproducibility Study of 'Learning Perturbations to Explain Time Series Predictions'

2024-05-18 all, publication, software, python, pytorch

In this work, we attempt to reproduce the results of Enguehard (2023), which introduced ExtremalMask, a mask-based perturbation method for explaining time series data. We investigated the key claims of this paper, namely that (1) the model outperformed other models in several key metrics on both synthetic and real data, and (2) the model performed better when using the loss function of the preservation game relative to that of the deletion game. Although discrepancies exist, our results generally support the core of the original paper’s conclusions. Next, we interpret ExtremalMask’s outputs using new visualizations and metrics and discuss the insights each interpretation provides. Finally, we test whether ExtremalMask create out of distribution samples, and found the model does not exhibit this flaw on our tested synthetic dataset. Overall, our results support and add nuance to the original paper’s findings.

InferSent sentence representations from scratch

2024-04-21 all, software, python, pytorch, natural language processing

This repository is a modular and extendable PyTorch Lightning re-implementation of InferSent sentence representations by Conneau et al., 2017. We train four types of sentence encoders - average, unidirectional LSTM, bidirectional LSTM, bidirectional LSTM with max pooling - via the natural language inference task using the SNLI dataset. This is done to create sentence representations which are general and transferable to other tasks. Refer to the paper for more information.

Measuring and mitigating factual hallucinations for text summarization

2023-10-14 all, software, python, pytorch, natural language processing

Advancements in NLG have improved text generation quality but still suffer from hallucinations, which lead to irrelevant information and factual inconsistencies. This paper provides an ensemble of metrics that measure whether the generated text is factually correct. Using these metrics we find that fine-tuning is a fruitful hallucination mitigation approach whilst prompt engineering is not.

BSc Thesis - Formal Verification of Deep Neural Networks for Sentiment Classification

2021-06-27 all, software, python, pytorch, natural language processing, julia, NeuralVerification.jl

Repository Thesis Presentation

I conducted research on verification, a technique that guarantees certain properties in neural networks. My focus was on the effectiveness of existing verification frameworks used in networks for sentiment classification, given the limited research in concrete NLP verification. Additionally, I explored the latent space attributes of various text representation methods. My findings reveal that the latent space created by an autoencoder, trained using a denoising adversarial objective, is effective for confirming the robustness of networks engaged in sentiment classification and for interpreting the outcomes of verification tools.

Aspect-Based Sentiment Analysis

2020-05-26 all, software, python, natural language processing, pytorch, tensorflow, textblob, spacy

I built a tool that analyzes the comments under a New York Times article for my Natural Language Processing course. Specifically, it finds aspects (topics) in a sentence and predicts the sentiment associated with each of them. This approach gives a more nuanced picture of the sentiments expressed in a sentence, rather than just the average sentiment of the sentence.

natural language processing

Investigating the cross-lingual sharing mechanism of multilingual models through their subnetworks

2024-06-01 all, software, python, pytorch, natural language processing

We investigate to what extent computation is shared across languages in multilingual models. In particular, we prune language-specific subnetworks from a multilingual model and check how much overlap there is between subnetworks for different languages and how these subnetworks perform across other languages. We also measure the similarity of the hidden states of a multilingual model for parallel sentences in different languages. All of these experiments suggest that a substantial amount of computation is being shared across languages in the multilingual model we use (XLM-R).

InferSent sentence representations from scratch

2024-04-21 all, software, python, pytorch, natural language processing

This repository is a modular and extensible PyTorch Lightning re-implementation of InferSent sentence representations (Conneau et al., 2017). We train four types of sentence encoders (average, unidirectional LSTM, bidirectional LSTM, and bidirectional LSTM with max pooling) on the natural language inference task using the SNLI dataset. The goal is to create sentence representations that are general and transferable to other tasks. Refer to the paper for more information.

Measuring and mitigating factual hallucinations for text summarization

2023-10-14 all, software, python, pytorch, natural language processing

Advances in NLG have improved text generation quality, but generated text still suffers from hallucinations, which introduce irrelevant information and factual inconsistencies. This paper provides an ensemble of metrics that measure whether the generated text is factually correct. Using these metrics, we find that fine-tuning is a fruitful hallucination mitigation approach whilst prompt engineering is not.

BSc Thesis - Formal Verification of Deep Neural Networks for Sentiment Classification

2021-06-27 all, software, python, pytorch, natural language processing, julia, NeuralVerification.jl

Repository Thesis Presentation

I conducted research on verification, a technique that guarantees certain properties of neural networks. My focus was on the effectiveness of existing verification frameworks when applied to networks for sentiment classification, given the limited research on concrete NLP verification. Additionally, I explored the latent space attributes of various text representation methods. My findings reveal that the latent space created by an autoencoder trained with a denoising adversarial objective is effective for confirming the robustness of sentiment classification networks and for interpreting the outcomes of verification tools.

Aspect-Based Sentiment Analysis

2020-05-26 all, software, python, natural language processing, pytorch, tensorflow, textblob, spacy

I built a tool that analyzes the comments under a New York Times article for my Natural Language Processing course. Specifically, it finds aspects (topics) in a sentence and predicts the sentiment associated with each of them. This approach gives a more nuanced picture of the sentiments expressed in a sentence, rather than just the average sentiment of the sentence.

Analysis of Signal Messenger chat for relationship anniversary

2020-03-14 all, natural language processing, adobe xd, python, matplotlib, nltk

logic programming

MSc Thesis - Inductive Learning of Temporal Advice Formulae for Guiding Planners

2025-04-07 all, software, python, theory, logic programming

My thesis addresses the sample inefficiency and lack of transparency in reinforcement learning by proposing a method to learn temporal advice formulae that guide planning algorithms more effectively. Using linear temporal logic on finite traces, the approach unifies prior work on guiding planners and learning from traces, leveraging ILASP to inductively learn answer set programs from examples. My work investigates noisy, partially observable domains and automates the generation of advice, unlike other works. Experiments in two planning environments show that high-quality examples of agent behaviour can yield broadly applicable advice formulae.